Hi All,

Has anyone had any experience with “Invalid response size”?

I get this message with Gemini 2.5, OpenAI 4.0 and 4.5, and Claude 3.7. The same agent runs successfully with OpenAI o3 and Claude 3.5. The prompt and system prompt are nowhere near the models' token limits.

I can only attach one image, but it's the exact same response for all of the LLMs listed above.

Thanks in advance for any help!

Hi @mattklein,

Thanks for the post!

From the screenshot, it looks like your Max Response Size is set to 100,000 tokens, while Gemini 2.5 Pro only supports up to 65,536 tokens.

Make sure to adjust the Max Response Size in both the Agent and Block Model Settings to stay within the supported limit.

Ah, thank you Alex! When I select the model, the Max Response Size seems to be set to what the model supports (e.g., 65,536 for Gemini 2.5). However, I do see 100,000 in the debugger output. Is there another place to set the response size? I'm not seeing it on a block-by-block basis.

Hi @mattklein,

By default, blocks use the Underlying Model set in your Agent, but you can also assign different models to individual blocks. If you've done that, make sure each block's Max Response Size is also within its model's limit.

Also, make sure to click the Publish button in the top right corner of the Agent IDE to save all changes.

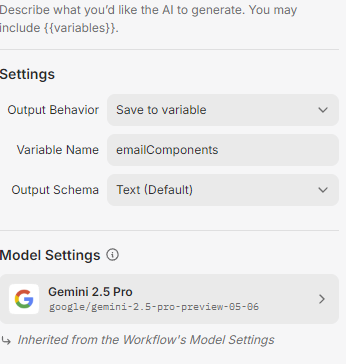

Thank you, OK. All blocks are set to use the default. This is the Generate Email Components block, for instance. I'm not sure where that 100,000 is coming from.

Hi @mattklein,

Could you please publish the Agent and record a Loom of a sample Run? Please include the Debugger in the recording so we can take a closer look at what might be causing the issue.

Thank you Alex, here’s a recording: Clips

Hi @mattklein,

Could you walk me through the steps you’ve taken so far?

From what I can see in the Debugger in your video, the model has been updated to GPT 4.5, but the Max Response Size is still set to 65,536 tokens, whereas the model supports only up to 8,000 tokens.

The only thing I've done is switch the model at the top level. It seems like the system is carrying over the Max Response Size from the previous model: before setting the Model Settings to ChatGPT 4.5, I had chosen Gemini 2.5, which would explain the 65,536 value.

Hi @mattklein,

Thank you for letting me know, and apologies for the inconvenience.

Is there any chance you could capture that issue in a recording? For example, select a model, publish the agent, then refresh the page and show whether the Max Response Size reverts to an older value. I'm trying to replicate this on our end, and seeing the exact steps you've taken would really help.

Thanks again Alex, I think this might help you:

I see I can probably adjust this accordingly now.

Is there a way to make the default Max Response Size never exceed the selected model's limit? It's a small point, but it would save some effort and reduce potential errors.
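One way a platform could handle this request is to clamp the configured Max Response Size every time the model changes, so a value carried over from a previous model can never exceed the new model's output limit. The sketch below is only an illustration, not how the Agent IDE actually works: `MODEL_OUTPUT_LIMITS`, `ModelSettings`, `clamp_max_response_size`, and `switch_model` are hypothetical names, and the limits are the figures quoted earlier in this thread.

```python
from dataclasses import dataclass

# Hypothetical limit table using the output-token figures quoted in this thread;
# not an official or complete registry of model limits.
MODEL_OUTPUT_LIMITS = {
    "gemini-2.5-pro": 65_536,
    "gpt-4.5": 8_000,
}


@dataclass
class ModelSettings:
    # Hypothetical stand-in for an Agent's or Block's Model Settings.
    model: str
    max_response_size: int


def clamp_max_response_size(requested: int, model: str) -> int:
    """Return the requested size, capped at the model's output-token limit."""
    if requested <= 0:
        raise ValueError("max_response_size must be positive")
    limit = MODEL_OUTPUT_LIMITS[model]  # KeyError for an unknown model
    return min(requested, limit)


def switch_model(settings: ModelSettings, new_model: str) -> ModelSettings:
    # Re-clamp on every switch so a value carried over from the previous
    # model (e.g. 65,536 from Gemini 2.5) is reduced to the new model's limit.
    return ModelSettings(
        model=new_model,
        max_response_size=clamp_max_response_size(settings.max_response_size, new_model),
    )


if __name__ == "__main__":
    settings = ModelSettings("gemini-2.5-pro", 65_536)
    settings = switch_model(settings, "gpt-4.5")
    print(settings.max_response_size)  # 8000
```

The same clamp would also have turned the 100,000 value seen in the debugger into 65,536 for Gemini 2.5 Pro, while leaving any value already under the limit unchanged.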