Hello @Alex_MindStudio again

We’d like to know the best way to implement three capabilities for our clients using MindStudio:

1. Monthly Usage Report (Per Client / Per Agent)

We need a monthly usage report that shows:

- Interactions/runs per day, per agent, and per client
- Monthly totals and, if possible, cost/expenses per agent
- Delivered automatically by email to:
  - Our internal team (configurable addresses)
  - The client (one or more email addresses)
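
To make the report requirement concrete, here is a rough Python sketch of the aggregation we have in mind. The `Run` record and its fields are our own hypothetical shape, not a MindStudio export format; we are only assuming that each run can be attributed to a client, an agent, a date, and optionally a cost.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Run:
    # Hypothetical record shape, not a MindStudio export format.
    client_id: str
    agent_id: str
    day: date
    cost: float = 0.0


def daily_counts(runs):
    """Count runs per (client, agent, day)."""
    return Counter((r.client_id, r.agent_id, r.day) for r in runs)


def monthly_totals(runs, year, month):
    """Return {(client, agent): (run_count, total_cost)} for one month."""
    totals = {}
    for r in runs:
        if (r.day.year, r.day.month) != (year, month):
            continue
        key = (r.client_id, r.agent_id)
        count, cost = totals.get(key, (0, 0.0))
        totals[key] = (count + 1, cost + r.cost)
    return totals
```

If usage data at this level of detail is available, we could build and email the report ourselves from these two views.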

Questions:

- Is there a built-in way to generate and email such reports?
- If not, do you provide an API or export endpoint with this level of usage detail?

2. Usage Alerts (Per Agent / Per Client)

We define a monthly limit of interactions/runs per client/agent and bill per interaction. We need alerts when we are close to those limits.

Requirements:

- Ability to set a monthly usage limit per agent (and ideally per client)
- Email alerts when usage reaches thresholds (e.g., 70%, 85%, 95%) and when the limit is exceeded
- Alert content: client ID, agent name/ID, current usage vs. limit
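
As an illustration of the alert logic, here is a minimal Python sketch. It assumes we can poll a usage counter per agent or client from some source; the function names and the message format are our own placeholders. Comparing the previous and current readings means each threshold fires only once per month.

```python
# Example thresholds from our requirements, as integer percentages.
THRESHOLDS_PCT = (70, 85, 95)


def crossed_thresholds(previous_usage, current_usage, limit,
                       thresholds_pct=THRESHOLDS_PCT):
    """Return labels for thresholds newly crossed between two readings."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    crossed = [
        f"{t}%"
        for t in thresholds_pct
        # Integer arithmetic avoids floating-point rounding at the boundary.
        if previous_usage * 100 < t * limit <= current_usage * 100
    ]
    if previous_usage <= limit < current_usage:
        crossed.append("limit exceeded")
    return crossed


def alert_message(client_id, agent_id, usage, limit):
    """Build the alert text: client ID, agent ID, current usage vs. limit."""
    pct = 100 * usage / limit
    return (f"Client {client_id}, agent {agent_id}: "
            f"{usage}/{limit} runs ({pct:.0f}% of monthly limit)")
```

For example, moving from 690 to 860 runs against a limit of 1000 would trigger the 70% and 85% alerts in a single check.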

Questions:

- Can we get real-time or near real-time usage via API or webhooks so we can implement this ourselves?

3. Observability / Quality Control (“Human/AI in the Loop”)

We want to review and validate agent responses, especially at the start.

Option A – Human in the loop:

- Access to conversation logs (user question + agent answer).
- Ability to review randomly or selectively.
- Ideally, review inside MindStudio or receive selected interactions by email for manual evaluation.

Option B – AI in the loop:

- A second “evaluator” agent that:
  - Receives the conversation and the main agent’s response.
  - Scores response quality (e.g., 0–10).
  - Triggers an email notification when the score is below 8 or the answer seems dubious.
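
The decision rule we have in mind for Option B could be sketched in Python as follows. The `Evaluation` type and the `dubious` flag are hypothetical names of ours; how the evaluator agent would actually return its score is exactly what we are asking about.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    # Hypothetical evaluator output: a 0-10 score plus an optional flag.
    score: float
    dubious: bool = False


def needs_review(evaluation, min_score=8):
    """Return True when the response should be emailed for human review."""
    if not 0 <= evaluation.score <= 10:
        raise ValueError("score must be between 0 and 10")
    return evaluation.score < min_score or evaluation.dubious
```

A score of exactly 8 passes; anything lower, or any answer flagged as dubious, would trigger the notification.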

Questions:

- Is it possible to configure such reviewer/evaluator workflows natively in MindStudio?
- Any examples or templates for evaluation/monitoring agents?

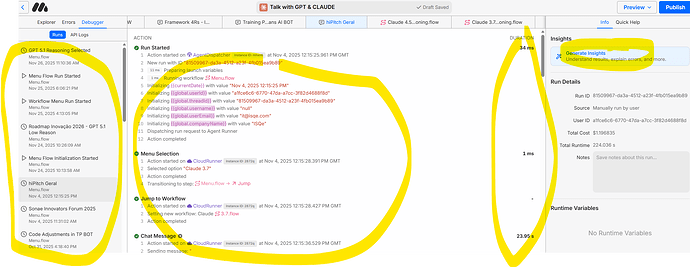

Logs / Debugger Data Export

Here is a screenshot of the debugger view we see in MindStudio.

Question:

- Is there a way to export these logs (prompts, responses, metadata) so they can be:
  - Downloaded in bulk, or
  - Sent to another agent/service for automated analysis?

Any documentation or examples covering usage exports, alerts, and reviewer workflows would be very helpful.

Best,

Fernando